Nvidia and Google Intensify Their AI Chip Rivalry as Reports Highlight Meta’s Interest

The global race to supply the next generation of artificial intelligence chips has taken a sharper turn as new reports suggest Meta Platforms is considering a large-scale purchase of Google’s tensor processing units. According to The Information, Meta is in discussions to spend billions on Google’s AI hardware, a move that would indicate shifting momentum in a market long dominated by Nvidia. If confirmed, the deal would represent one of the strongest endorsements yet of Google’s chip development program and could meaningfully reshape competitive dynamics in the high-performance compute sector.

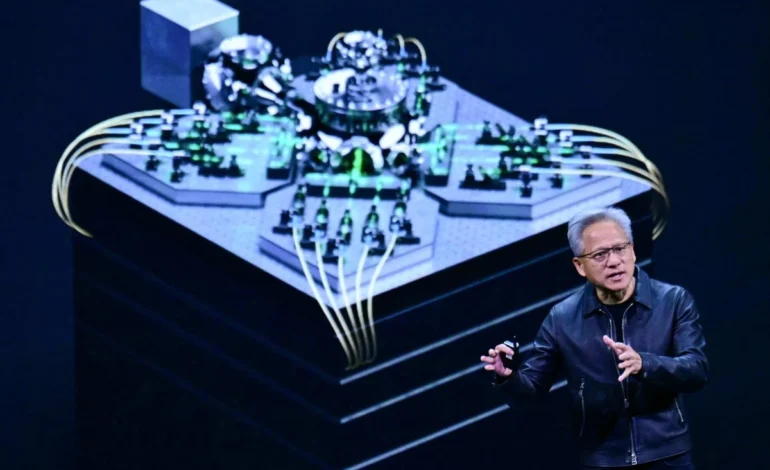

The reported negotiations come at a time when major technology firms face unprecedented demand for computational resources. Training large language models and deploying multimodal AI systems now require enormous volumes of specialised hardware. For years, Nvidia has been the default supplier for these workloads, and its GPUs anchor the data centers of nearly every major AI laboratory and enterprise technology platform. But Google's recent commitment to commercialising its in-house TPU line has opened the possibility of a more diversified market.

Meta’s potential shift signals confidence in alternative architectures

Meta’s interest in Google’s chips reflects the company’s broader challenge of securing enough compute power to scale its AI ambitions. The firm has announced increasingly complex foundation models and continues to expand its generative AI products across social platforms. This growth requires a stable supply of accelerators capable of supporting extensive training cycles. While Meta remains one of Nvidia’s largest customers, it has also explored alternative hardware to improve efficiency and reduce supply-related risk.

Google's TPUs represent a different design philosophy from Nvidia's GPUs. They are purpose-built accelerators optimised for the matrix operations common in deep learning. Their tight integration with Google's data center architecture and software ecosystem has given them strong performance on internal workloads. Meta's evaluation of these chips suggests that TPUs are becoming attractive not only to Google but also to external customers seeking large-scale, predictable training efficiency. If Meta adopts Google's accelerators, it would mark a notable shift in how AI-heavy companies diversify their compute sources.

Growing momentum behind Google’s AI hardware strategy

Google has been steadily expanding its presence in the AI chip market. Earlier this year, the company signed an agreement to supply Anthropic with up to one million chips. This deal was a significant milestone, indicating that Google is ready to support external customers with hardware at a scale once reserved for internal operations. The new report positioning Meta as a potential buyer reinforces that trend.

Alphabet's stock performance also reflects investor expectations that Google's AI hardware and cloud initiatives will drive long-term growth. As trading opened in New York, Alphabet was poised to reach a market valuation of four trillion US dollars for the first time. The milestone underscores how central AI infrastructure has become to the financial outlook of global technology firms.

Nvidia, meanwhile, saw its shares fall about four percent in premarket trading following the news. The reaction highlights the market's sensitivity to any development that suggests erosion of Nvidia's near-total dominance in AI compute. The company remains the clear industry leader, but its rivals are increasingly visible.

Competitive pressure reshapes the AI hardware landscape

The intensifying competition between Nvidia and Google is reshaping expectations about how the AI hardware ecosystem may evolve. For much of the past decade, Nvidia's GPUs have served as the backbone of AI research and deployment. Their versatility, high throughput and strong software support ensured near-universal adoption. But Google has spent years refining TPUs to specialise in specific classes of deep learning workloads, particularly large-scale training.

The potential participation of Meta, one of the world's largest AI developers, lends weight to the notion that the TPU architecture is now mature enough for the demands of large-model development beyond Google's own ecosystem. If Meta begins integrating TPUs across its global data centers, it could encourage other companies to explore alternatives to Nvidia, increasing market competition and accelerating innovation.

Implications for AI compute supply and market structure

The rapid rise in AI computation requirements has placed enormous pressure on global chip supply chains. Companies are racing not only to innovate but also to ensure guaranteed access to hardware. Google’s emergence as a viable supplier offers hyperscalers and AI labs additional resilience. For Meta, adopting TPUs could ease reliance on a single vendor while providing cost or efficiency advantages.

The rivalry between Nvidia and Google also benefits the wider industry. Competing architectures encourage diversity in chip design, optimisation techniques and software frameworks. They also help prevent the bottlenecks that arise when an entire industry depends on one supplier.

A turning point for AI hardware competition

The reported discussions between Meta and Google mark a potential inflection point in the global AI chip race. While Nvidia remains the leader, the landscape is becoming more competitive as large technology firms seek alternative paths to scaling their compute. With demand for AI models expected to grow rapidly, the future will likely be shaped by multiple architectures and an increasingly diverse hardware ecosystem.